AP的解释

可能的Bug

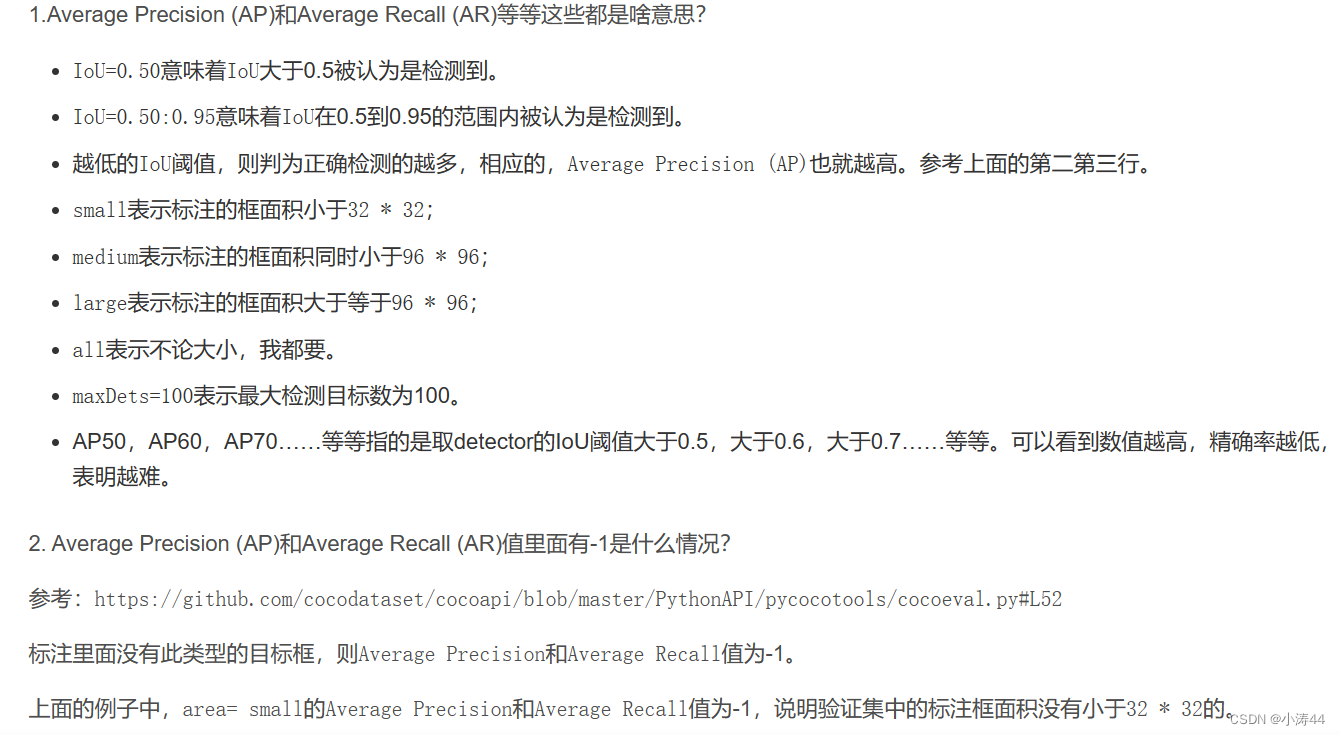

问题

验证的时候评价指标输出是酱紫的

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.177

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=1000 ] = 0.608

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=1000 ] = 0.057

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.293

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.292

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=300 ] = 0.292

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=1000 ] = 0.292

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.292

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = -1.000

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = -1.000

可见area= medium和area=large的AP值为-1,不知道是不是bug导致我的mAP很低,翻了一圈找到了以下解释,总算是弄懂了

AP的解释

首先得搞懂这些AP都代表什么意思,为什么就medium和large的AP值为-1

所以说,这和验证集里标注框面积有关,得检查自己的数据集如果数据框的都是比较小的,中型和大型的自然就没有,所以无法评判(从Github上的回答来看,源码里对于没有的就设置为-1)

可能的Bug

那如果说确实有中大型的检测框,还是-1,那是为啥呢?

那可能是coco数据集的json文件有问题了,在json2coco.py转换时area恒等于1了

因此找到并修改

# annotation['area'] = 1.0 # 导致medium和large的ap都是-1 annotation['area'] = annotation['bbox'][2] * annotation['bbox'][3]

就可以了~