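After a fair amount of trial and error, I finally got the result I wanted. Testing showed that it runs quite well, so I'm writing the process down here.

Building the sherpa-ncnn shared libraries

First, download the sherpa-ncnn project and build it. It can be downloaded directly from GitHub: sherpa-ncnn. If you can't reach GitHub or the download fails, I've put the relevant resources and the prebuilt shared libraries at the top of this article.

Once it's downloaded, follow the steps in the documentation at k2-fsa.github.io and build with the NDK. The documentation is already quite clear, so following it step by step is enough. I'll still record my own build process here: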

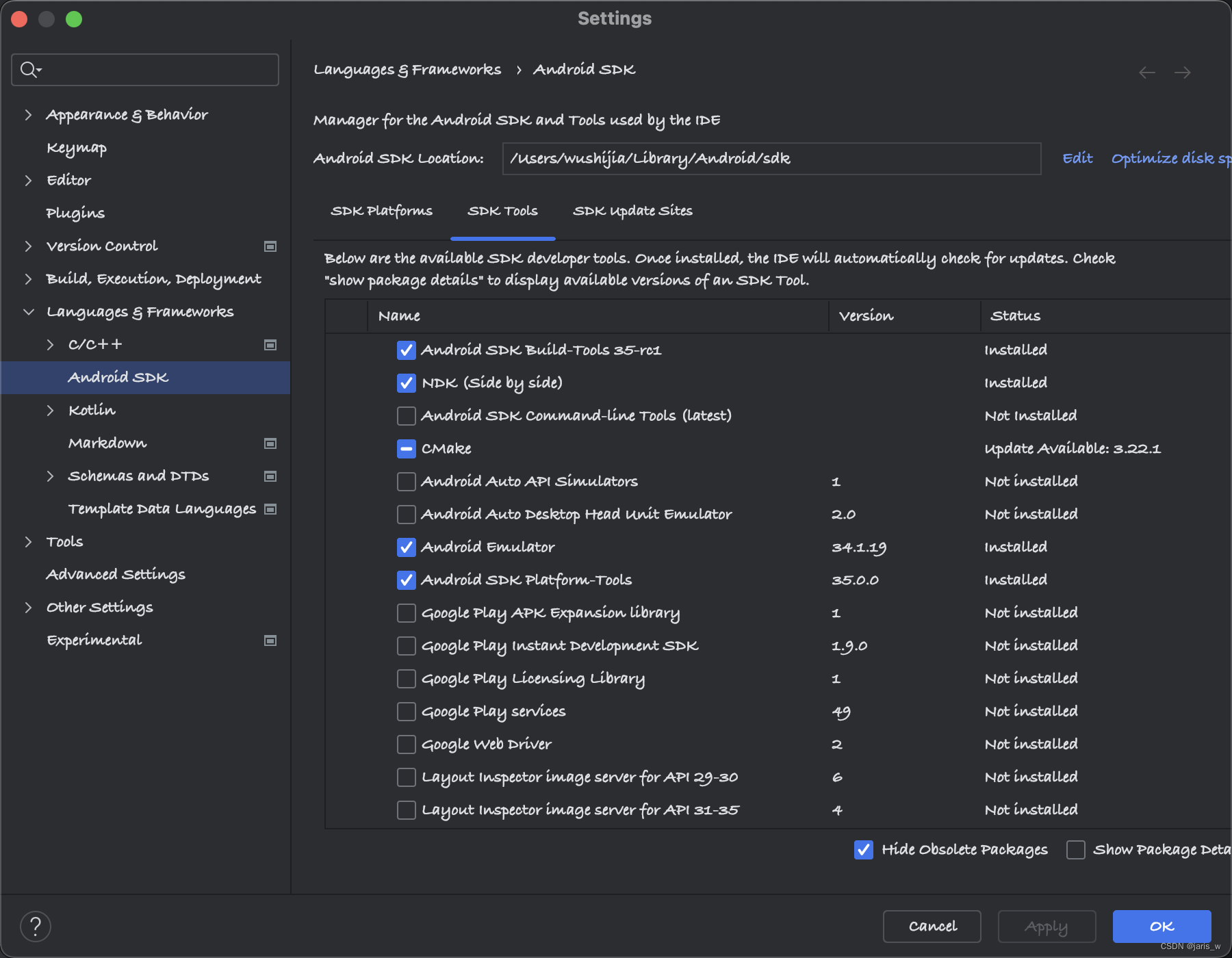

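First, install the NDK from Android Studio. Here is a screenshot of my installation:

The screenshot above shows where the NDK ends up after installation; in my case it is /Users/wushijia/Library/Android/sdk/ndk, and that directory lists everything that is installed. Here is a screenshot of my folder as well; I've installed several NDK versions, so it looks like this:

Next, build the shared libraries following the documentation. First, on the command line, change into the sherpa-ncnn-master project directory, then set the NDK environment variable. Of my NDK paths I picked the one with the highest version and ran the following command: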

export ANDROID_NDK=/Users/wushijia/Library/Android/sdk/ndk/26.2.11394342

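This command only sets the environment variable for the current shell session; I didn't bother editing any system files. The setting is lost once you leave the shell, so keep in mind that it has to be set again whenever you build the libraries.

With the environment variable set, run the following scripts one by one from the sherpa-ncnn-master project directory: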
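When several NDK versions sit side by side, "the highest version" has to be decided numerically, not alphabetically (as plain strings, "9.x" would sort after "26.x"). The following is a minimal sketch of that comparison in Java. The class name and the two older version strings are made up for illustration; only 26.2.11394342 comes from the article.

```java
import java.util.Arrays;
import java.util.List;

/** Picks the newest NDK directory name from a list such as the contents of sdk/ndk. */
final class NdkVersionPicker {

    /** Compares dotted numeric versions ("26.2.11394342") component by component. */
    static int compareVersions(String a, String b) {
        String[] pa = a.split("\\.");
        String[] pb = b.split("\\.");
        int n = Math.max(pa.length, pb.length);
        for (int i = 0; i < n; i++) {
            // Missing components count as 0, so "26.2" equals "26.2.0".
            long x = i < pa.length ? Long.parseLong(pa[i]) : 0L;
            long y = i < pb.length ? Long.parseLong(pb[i]) : 0L;
            if (x != y) {
                return Long.compare(x, y);
            }
        }
        return 0;
    }

    /** Returns the numerically largest version string. */
    static String newest(List<String> versions) {
        return versions.stream()
                .max(NdkVersionPicker::compareVersions)
                .orElseThrow(() -> new IllegalArgumentException("no NDK versions given"));
    }

    public static void main(String[] args) {
        // Hypothetical directory listing; only the last entry appears in the article.
        List<String> installed = Arrays.asList("21.4.7075529", "25.1.8937393", "26.2.11394342");
        System.out.println(newest(installed));
    }
}
```

The returned name is what would be appended to the sdk/ndk path when setting ANDROID_NDK.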

./build-android-arm64-v8a.sh

./build-android-armv7-eabi.sh

./build-android-x86-64.sh

./build-android-x86.sh

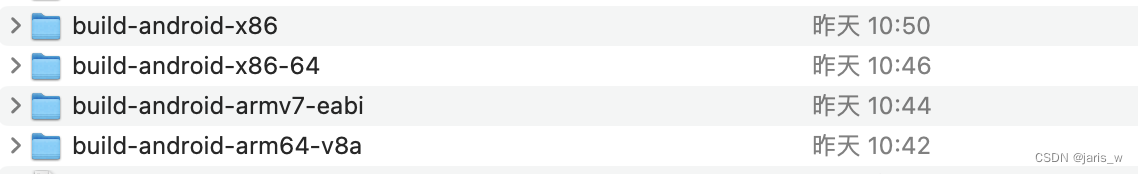

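Once the scripts finish successfully, you get the following folders in the same directory as the scripts:

Each folder contains the corresponding install/lib/*.so files; these are the shared libraries that Android needs.

Integrating into the Android project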

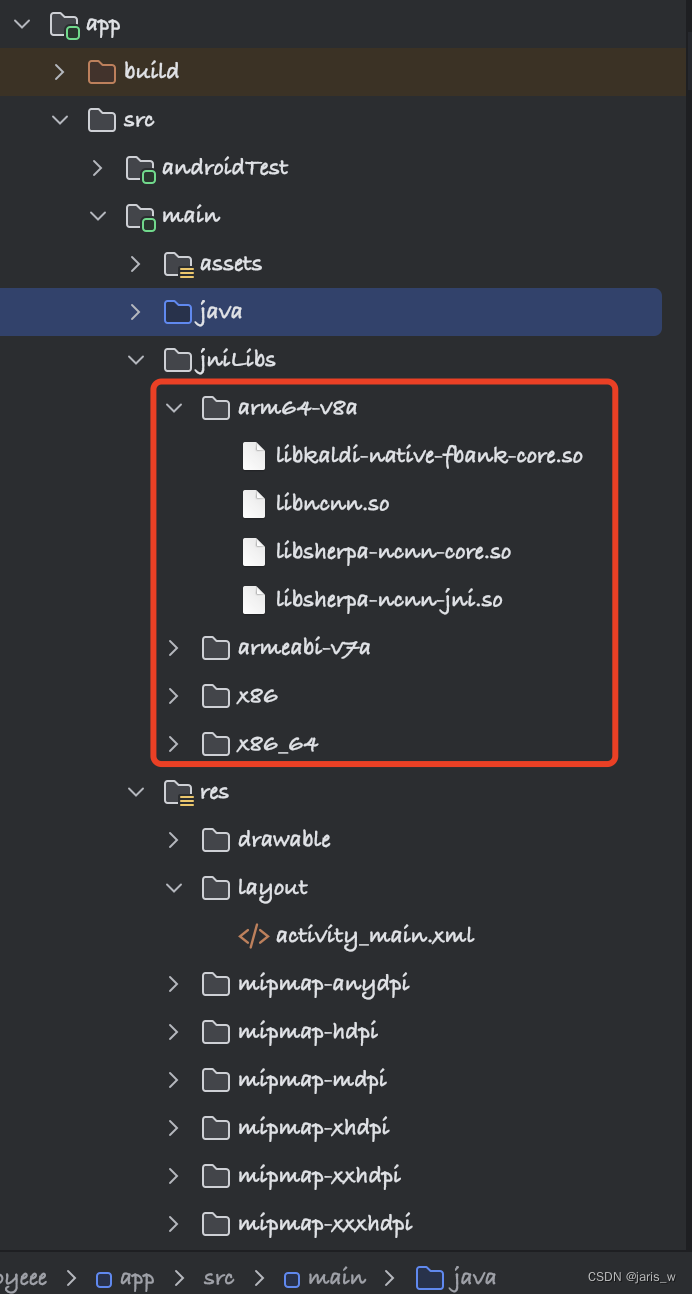

Next, copy the shared libraries into the matching directories under jniLibs in the Android Studio project. The result looks like this:

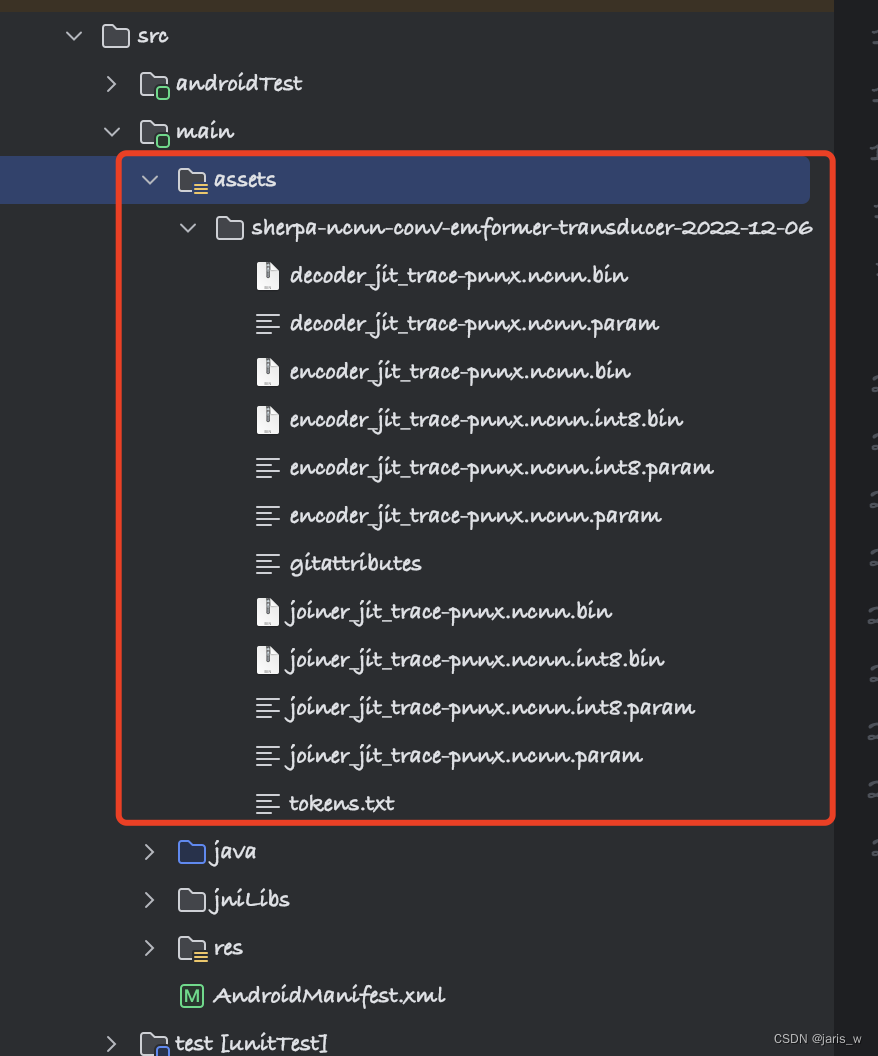
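The copy step can also be scripted. The sketch below maps each build directory to the ABI folder name that Android expects under jniLibs and copies the .so files across. It assumes the build directories are named after the four scripts (build-android-arm64-v8a and so on), which the article only shows in a screenshot; the class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

final class JniLibsCopier {

    /** Maps a build directory name (assumed to match the script name) to its jniLibs ABI folder. */
    static String abiFor(String buildDir) {
        switch (buildDir) {
            case "build-android-arm64-v8a": return "arm64-v8a";
            case "build-android-armv7-eabi": return "armeabi-v7a";
            case "build-android-x86-64": return "x86_64";
            case "build-android-x86": return "x86";
            default: throw new IllegalArgumentException("unknown build directory: " + buildDir);
        }
    }

    /** Copies every *.so from sherpaRoot/buildDir/install/lib into jniLibs/abi; returns the file count. */
    static int copyLibs(Path sherpaRoot, String buildDir, Path jniLibs) throws IOException {
        Path src = sherpaRoot.resolve(buildDir).resolve("install").resolve("lib");
        Path dst = jniLibs.resolve(abiFor(buildDir));
        Files.createDirectories(dst);
        int copied = 0;
        try (DirectoryStream<Path> libs = Files.newDirectoryStream(src, "*.so")) {
            for (Path lib : libs) {
                Files.copy(lib, dst.resolve(lib.getFileName()), StandardCopyOption.REPLACE_EXISTING);
                copied++;
            }
        }
        return copied;
    }
}
```

Note that the ABI folder names are not identical to the script suffixes: the armv7 build goes into armeabi-v7a and the x86-64 build into x86_64.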

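After that, the pre-trained model also needs to be copied into the Android project. The result looks like this:

With that done, we can start calling the library.

To read the model files, I borrowed someone else's Kotlin code. As a result, my project ends up with Java code calling Kotlin code; it's a bit messy, but it works. My java folder looks like this:

One thing to watch out for: on Android, the native functions in a shared library are bound to the Java package name, so when using someone else's shared library, the package name has to follow their convention. The SherpaNcnn.kt file must therefore live in the package com.k2fsa.sherpa.ncnn; otherwise, at runtime you get an error saying the function can't be found in the library. Changing the library itself would mean modifying the C++ source, so simply keeping the original package name is the easiest option.

Here is the complete SherpaNcnn.kt; it can also be downloaded from the resources at the top of the article: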
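The reason the package name matters is that JNI looks up a native function by a symbol name built from the fully qualified class name and the method name. The following sketch applies the JNI name-mangling rules for non-overloaded methods. The class JniSymbolName and the package com.example are hypothetical, and the exact symbols exported by the sherpa-ncnn library are inferred from the Kotlin package declaration, not read from the .so file.

```java
final class JniSymbolName {

    /** Escapes one name following the JNI rules: '.' or '/' -> '_', '_' -> "_1", ';' -> "_2", '[' -> "_3". */
    static String mangle(String name) {
        StringBuilder sb = new StringBuilder();
        for (char c : name.toCharArray()) {
            if (c == '.' || c == '/') {
                sb.append('_');
            } else if (c == '_') {
                sb.append("_1");
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
                sb.append(c);
            } else {
                // Any other character is written as _0 followed by four lowercase hex digits.
                sb.append(String.format("_0%04x", (int) c));
            }
        }
        return sb.toString();
    }

    /** Builds the short JNI symbol name for a native method. */
    static String symbolFor(String className, String methodName) {
        return "Java_" + mangle(className) + "_" + mangle(methodName);
    }

    public static void main(String[] args) {
        // Name the library is expected to export for SherpaNcnn.newFromAsset.
        System.out.println(symbolFor("com.k2fsa.sherpa.ncnn.SherpaNcnn", "newFromAsset"));
        // Name the runtime would look for if the class were moved to another package.
        System.out.println(symbolFor("com.example.SherpaNcnn", "newFromAsset"));
    }
}
```

The two printed names differ, which is why moving SherpaNcnn.kt to another package leaves the runtime searching for a symbol the prebuilt library does not contain.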

package com.k2fsa.sherpa.ncnn

import android.content.res.AssetManager

data class FeatureExtractorConfig(
    var sampleRate: Float,
    var featureDim: Int,
)

data class ModelConfig(
    var encoderParam: String,
    var encoderBin: String,
    var decoderParam: String,
    var decoderBin: String,
    var joinerParam: String,
    var joinerBin: String,
    var tokens: String,
    var numThreads: Int = 1,
    var useGPU: Boolean = true, // If there is a GPU and useGPU true, we will use GPU
)

data class DecoderConfig(
    var method: String = "modified_beam_search", // valid values: greedy_search, modified_beam_search
    var numActivePaths: Int = 4, // used only by modified_beam_search
)

data class RecognizerConfig(
    var featConfig: FeatureExtractorConfig,
    var modelConfig: ModelConfig,
    var decoderConfig: DecoderConfig,
    var enableEndpoint: Boolean = true,
    var rule1MinTrailingSilence: Float = 2.4f,
    var rule2MinTrailingSilence: Float = 1.0f,
    var rule3MinUtteranceLength: Float = 30.0f,
    var hotwordsFile: String = "",
    var hotwordsScore: Float = 1.5f,
)

class SherpaNcnn(
    var config: RecognizerConfig,
    assetManager: AssetManager? = null,
) {
    private val ptr: Long

    init {
        if (assetManager != null) {
            ptr = newFromAsset(assetManager, config)
        } else {
            ptr = newFromFile(config)
        }
    }

    protected fun finalize() {
        delete(ptr)
    }

    fun acceptSamples(samples: FloatArray) =
        acceptWaveform(ptr, samples = samples, sampleRate = config.featConfig.sampleRate)

    fun isReady() = isReady(ptr)

    fun decode() = decode(ptr)

    fun inputFinished() = inputFinished(ptr)

    fun isEndpoint(): Boolean = isEndpoint(ptr)

    fun reset(recreate: Boolean = false) = reset(ptr, recreate = recreate)

    val text: String
        get() = getText(ptr)

    private external fun newFromAsset(
        assetManager: AssetManager,
        config: RecognizerConfig,
    ): Long

    private external fun newFromFile(
        config: RecognizerConfig,
    ): Long

    private external fun delete(ptr: Long)
    private external fun acceptWaveform(ptr: Long, samples: FloatArray, sampleRate: Float)
    private external fun inputFinished(ptr: Long)
    private external fun isReady(ptr: Long): Boolean
    private external fun decode(ptr: Long)
    private external fun isEndpoint(ptr: Long): Boolean
    private external fun reset(ptr: Long, recreate: Boolean)
    private external fun getText(ptr: Long): String

    companion object {
        init {
            System.loadLibrary("sherpa-ncnn-jni")
        }
    }
}

fun getFeatureExtractorConfig(
    sampleRate: Float,
    featureDim: Int
): FeatureExtractorConfig {
    return FeatureExtractorConfig(
        sampleRate = sampleRate,
        featureDim = featureDim,
    )
}

fun getDecoderConfig(method: String, numActivePaths: Int): DecoderConfig {
    return DecoderConfig(method = method, numActivePaths = numActivePaths)
}

/*
@param type
0 - https://huggingface.co/csukuangfj/sherpa-ncnn-2022-09-30
    This model supports only Chinese

1 - https://huggingface.co/csukuangfj/sherpa-ncnn-conv-emformer-transducer-2022-12-06
    This model supports both English and Chinese

2 - https://huggingface.co/csukuangfj/sherpa-ncnn-streaming-zipformer-bilingual-zh-en-2023-02-13
    This model supports both English and Chinese

3 - https://huggingface.co/csukuangfj/sherpa-ncnn-streaming-zipformer-en-2023-02-13
    This model supports only English

4 - https://huggingface.co/shaojieli/sherpa-ncnn-streaming-zipformer-fr-2023-04-14
    This model supports only French

5 - https://github.com/k2-fsa/sherpa-ncnn/releases/download/models/sherpa-ncnn-streaming-zipformer-zh-14M-2023-02-23.tar.bz2
    This is a small model and supports only Chinese

6 - https://github.com/k2-fsa/sherpa-ncnn/releases/download/models/sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16.tar.bz2
    This is a medium model and supports both English and Chinese

Please follow
https://k2-fsa.github.io/sherpa/ncnn/pretrained_models/index.html
to add more pre-trained models
*/

fun getModelConfig(type: Int, useGPU: Boolean): ModelConfig? {
    when (type) {
        0 -> {
            val modelDir = "sherpa-ncnn-2022-09-30"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 1,
                useGPU = useGPU,
            )
        }

        1 -> {
            val modelDir = "sherpa-ncnn-conv-emformer-transducer-2022-12-06"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.int8.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.int8.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.int8.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.int8.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 1,
                useGPU = useGPU,
            )
        }

        2 -> {
            val modelDir = "sherpa-ncnn-streaming-zipformer-bilingual-zh-en-2023-02-13"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 1,
                useGPU = useGPU,
            )
        }

        3 -> {
            val modelDir = "sherpa-ncnn-streaming-zipformer-en-2023-02-13"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 1,
                useGPU = useGPU,
            )
        }

        4 -> {
            val modelDir = "sherpa-ncnn-streaming-zipformer-fr-2023-04-14"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 1,
                useGPU = useGPU,
            )
        }

        5 -> {
            val modelDir = "sherpa-ncnn-streaming-zipformer-zh-14M-2023-02-23"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 2,
                useGPU = useGPU,
            )
        }

        6 -> {
            val modelDir = "sherpa-ncnn-streaming-zipformer-small-bilingual-zh-en-2023-02-16/96"
            return ModelConfig(
                encoderParam = "$modelDir/encoder_jit_trace-pnnx.ncnn.param",
                encoderBin = "$modelDir/encoder_jit_trace-pnnx.ncnn.bin",
                decoderParam = "$modelDir/decoder_jit_trace-pnnx.ncnn.param",
                decoderBin = "$modelDir/decoder_jit_trace-pnnx.ncnn.bin",
                joinerParam = "$modelDir/joiner_jit_trace-pnnx.ncnn.param",
                joinerBin = "$modelDir/joiner_jit_trace-pnnx.ncnn.bin",
                tokens = "$modelDir/tokens.txt",
                numThreads = 2,
                useGPU = useGPU,
            )
        }
    }
    return null
}

With all of the steps above done, every external prerequisite is in place and the finish line is in sight. Next comes the call from my own MainActivity:

package com.qimiaoren.aiemployee;

import android.Manifest;
import android.app.Activity;
import android.app.AlertDialog;
import android.content.DialogInterface;
import android.content.Intent;
import android.content.pm.PackageManager;
import android.content.res.AssetManager;
import android.media.AudioFormat;
import android.media.AudioRecord;
import android.media.MediaRecorder;
import android.net.Uri;
import android.os.Bundle;
import android.provider.Settings;
import android.util.Log;
import android.view.View;
import android.widget.Button;
import android.widget.Toast;

import androidx.annotation.NonNull;
import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;

import com.k2fsa.sherpa.ncnn.DecoderConfig;
import com.k2fsa.sherpa.ncnn.FeatureExtractorConfig;
import com.k2fsa.sherpa.ncnn.ModelConfig;
import com.k2fsa.sherpa.ncnn.RecognizerConfig;
import com.k2fsa.sherpa.ncnn.SherpaNcnn;
import com.k2fsa.sherpa.ncnn.SherpaNcnnKt;

import net.jaris.aiemployeee.R;

import java.util.Arrays;

public class MainActivity extends Activity {

    private static final String TAG = "AITag";
    private static final int MY_PERMISSIONS_REQUEST = 1;

    Button start;

    private AudioRecord audioRecord = null;
    private int audioSource = MediaRecorder.AudioSource.MIC;
    private int sampleRateInHz = 16000;
    private int channelConfig = AudioFormat.CHANNEL_IN_MONO;
    private int audioFormat = AudioFormat.ENCODING_PCM_16BIT;
    private boolean isRecording = false;

    boolean useGPU = true;

    private SherpaNcnn model;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        initView();
        checkPermission();
        initModel();
    }

    private void initView() {
        start = findViewById(R.id.start);
        start.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View view) {
                Toast.makeText(MainActivity.this, "Start speaking", Toast.LENGTH_SHORT).show();
                if (!isRecording){
                    if (initMicrophone()){
                        Log.d(TAG, "onClick: microphone initialized");
                        isRecording = true;
                        audioRecord.startRecording();
                        new Thread(new Runnable() {
                            @Override
                            public void run() {
                                // Read the microphone in 0.1 s chunks (1600 samples at 16 kHz).
                                double interval = 0.1;
                                int bufferSize = (int) (interval * sampleRateInHz);
                                short[] buffer = new short[bufferSize];
                                int silenceDuration = 0;
                                while (isRecording){
                                    int ret = audioRecord.read(buffer,0,buffer.length);
                                    if (ret > 0) {
                                        int energy = 0;
                                        int zeroCrossing = 0;

                                        for (int i = 0; i 0 && buffer[i] * buffer[i - 1] > 0){
                                            zeroCrossing ++;
                                        }
                                        }
                                        if (energy = 500){
                                            Log.d(TAG, "run: 说话停顿");
                                            continue;
                                        }
                                        float[] samples = new float[ret];
                                        for (int i = 0; i

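Note that some of the imports above come from "com.k2fsa.sherpa.ncnn".

The core of the calling code is what happens inside the new Thread that is started when the start button is clicked. A while loop keeps reading audio data from the microphone, processes it, and passes it to the model's acceptSamples function, after which the recognized text can be read back.

Inside the thread I wrote some logic of my own to decide when the speaker has paused; use it as a reference and adapt it to your own needs.

Of the last two log statements in the thread, the first prints the text recognized in real time, and the second prints the complete sentence returned after a pause. In most cases the text inside the isEndpoint check is the one to use.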
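The processing before acceptSamples is mainly a format conversion: AudioRecord delivers 16-bit PCM as short values, while acceptSamples takes a float array. Below is a minimal sketch of that conversion. The listing above breaks off at exactly this point, so scaling by 32768 to reach the range [-1, 1) is an assumption and not the author's confirmed code; the class name is hypothetical.

```java
final class PcmConverter {

    /** Converts the first count 16-bit PCM samples to floats in [-1, 1). */
    static float[] toFloat(short[] pcm, int count) {
        if (count < 0 || count > pcm.length) {
            throw new IllegalArgumentException("count out of range: " + count);
        }
        float[] out = new float[count];
        for (int i = 0; i < count; i++) {
            // Assumed scaling: 32768 is the magnitude of the most negative 16-bit sample.
            out[i] = pcm[i] / 32768.0f;
        }
        return out;
    }
}
```

In the recording loop this would be called as toFloat(buffer, ret), because read may fill only part of the buffer, and the result would then be handed to model.acceptSamples.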
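The exact pause logic is not recoverable from the listing above, which only shows that it tracks an energy value, a zero-crossing count and a silence duration compared against 500. The sketch below is one possible version of such a detector, not the author's code. The class name, the thresholds and the choice of milliseconds as the unit are all assumptions.

```java
final class PauseDetector {
    private final int sampleRate;
    private final double energyThreshold;   // assumed: mean squared amplitude below this is quiet
    private final int maxZeroCrossings;     // assumed: at most this many sign changes per buffer
    private final int minPauseMs;           // assumed: silence needed before a pause is reported
    private int silenceMs = 0;

    PauseDetector(int sampleRate, double energyThreshold, int maxZeroCrossings, int minPauseMs) {
        this.sampleRate = sampleRate;
        this.energyThreshold = energyThreshold;
        this.maxZeroCrossings = maxZeroCrossings;
        this.minPauseMs = minPauseMs;
    }

    /** Mean of the squared samples; accumulated in double because an int sum can overflow. */
    static double meanEnergy(short[] buf, int count) {
        if (count <= 0) {
            return 0.0;
        }
        double sum = 0.0;
        for (int i = 0; i < count; i++) {
            sum += (double) buf[i] * buf[i];
        }
        return sum / count;
    }

    /** Number of sign changes between neighbouring samples. */
    static int zeroCrossings(short[] buf, int count) {
        int crossings = 0;
        for (int i = 1; i < count; i++) {
            if ((buf[i] >= 0) != (buf[i - 1] >= 0)) {
                crossings++;
            }
        }
        return crossings;
    }

    /** Feeds one buffer; returns true once the accumulated silence reaches minPauseMs. */
    boolean isPause(short[] buf, int count) {
        boolean quiet = meanEnergy(buf, count) < energyThreshold
                && zeroCrossings(buf, count) <= maxZeroCrossings;
        if (quiet) {
            silenceMs += count * 1000 / sampleRate;
        } else {
            silenceMs = 0; // any non-quiet buffer restarts the count
        }
        return silenceMs >= minPauseMs;
    }
}
```

With 0.1 s buffers at 16 kHz, new PauseDetector(16000, 1000.0, 50, 500) would report a pause on the fifth consecutive quiet buffer. The energy is summed in double because the squares of 1600 16-bit samples can exceed the range of an int.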
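The two kinds of text can be told apart with a loop of the following shape. This is a sketch under assumptions: the Recognizer interface only mirrors the SherpaNcnn methods shown in SherpaNcnn.kt as they appear from Java, and FakeRecognizer is a made-up stand-in so the example runs without the native library. It does no speech recognition at all.

```java
import java.util.ArrayList;
import java.util.List;

final class StreamingLoopSketch {

    /** The subset of SherpaNcnn used by the loop, as seen from Java. */
    interface Recognizer {
        void acceptSamples(float[] samples);
        boolean isReady();
        void decode();
        boolean isEndpoint();
        String getText();
        void reset(boolean recreate);
    }

    /** Feeds one chunk and records "partial:" and "final:" events in order. */
    static void feed(Recognizer r, float[] samples, List<String> events) {
        r.acceptSamples(samples);
        while (r.isReady()) {
            r.decode();
        }
        String text = r.getText();
        if (!text.isEmpty()) {
            events.add("partial:" + text);   // text recognized so far
        }
        if (r.isEndpoint()) {
            if (!text.isEmpty()) {
                events.add("final:" + text); // complete sentence after a pause
            }
            r.reset(false);
        }
    }

    /** Stand-in for the real recognizer: appends "x" per voiced chunk, endpoints on an all-zero chunk. */
    static final class FakeRecognizer implements Recognizer {
        private final StringBuilder text = new StringBuilder();
        private int pending = 0;
        private boolean lastWasSilence = false;

        public void acceptSamples(float[] samples) {
            lastWasSilence = true;
            for (float s : samples) {
                if (s != 0f) {
                    lastWasSilence = false;
                }
            }
            if (!lastWasSilence) {
                pending++;
            }
        }
        public boolean isReady() { return pending > 0; }
        public void decode() { pending--; text.append("x"); }
        public boolean isEndpoint() { return lastWasSilence; }
        public String getText() { return text.toString(); }
        public void reset(boolean recreate) { text.setLength(0); }
    }

    /** Runs two voiced chunks followed by one silent chunk through the loop. */
    static List<String> demo() {
        List<String> events = new ArrayList<>();
        Recognizer r = new FakeRecognizer();
        feed(r, new float[]{0.1f}, events);
        feed(r, new float[]{0.1f}, events);
        feed(r, new float[]{0f}, events);
        return events;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [partial:x, partial:xx, partial:xx, final:xx]
    }
}
```

The partial events correspond to the real-time log line and the final event to the one inside the isEndpoint check. Resetting after an endpoint starts the next sentence from empty text.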